How Do Search Engines Work: 4 Important Functions

Search engines are a very important communication platform between your target audience and your website. They let users find your website by searching for specific keywords, which brings visitors to you.

But how exactly does this process, whose final step we all know, actually work? How exactly is your website crawled, indexed, rendered, and ranked for certain keywords?

If you want to learn about SEO and strengthen your website’s rankings with solid SEO work, you must first understand how search engines work.

So let’s start with this detailed guide prepared by Screpy experts!


What Do Search Engines Do? 4 Functions of One Actor!

A search engine performs 4 basic functions in order to offer a better user experience (UX) by presenting the most relevant websites to its users.

These can be listed as follows:

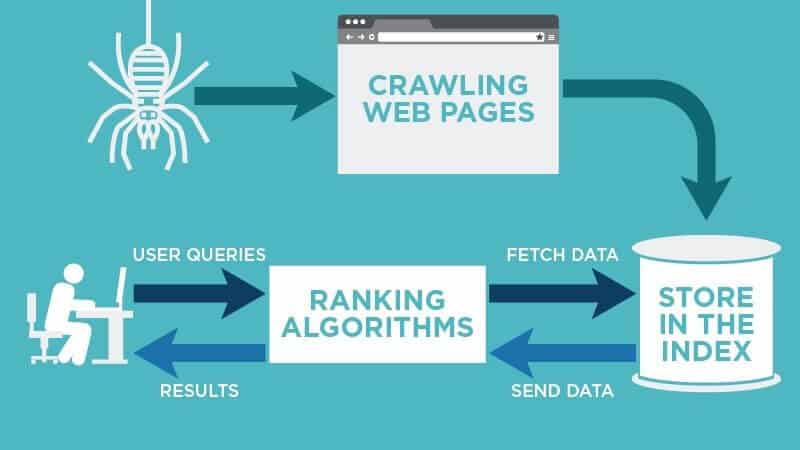

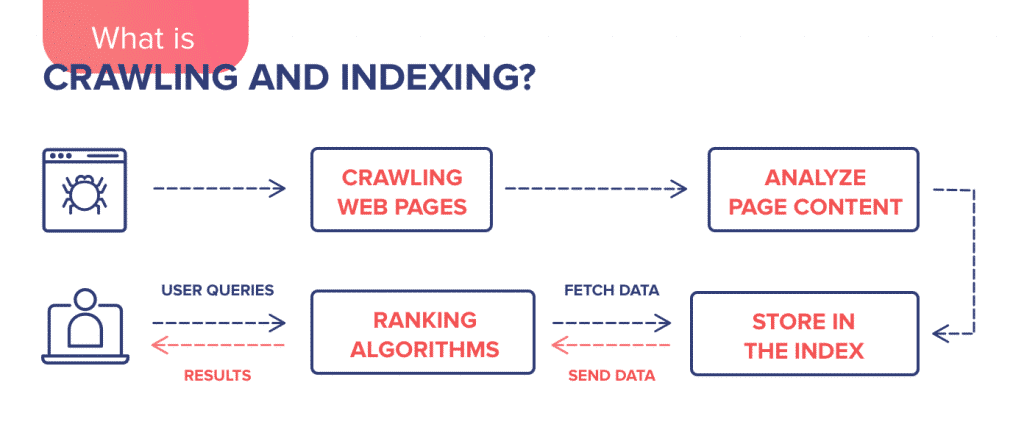

Crawling

Web crawlers, also called robots, spiders, or bots, are programs that travel through your site by following the links between its pages (they jump from one page to another via the links that connect them).

Through this process, they examine your website. By moving from one link to the next, bots can learn about the user experience a specific web page offers.
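To make the link-following idea concrete, here is a minimal Python sketch of a breadth-first crawler. It is a simplification under stated assumptions: the site is a hypothetical in-memory dictionary (`SITE`) instead of real HTTP requests, and `crawl` and `LinkExtractor` are illustrative names, not a description of how Googlebot is actually built.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkExtractor(HTMLParser):
    """Collects the href value of every <a> tag in an HTML document."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def crawl(start_url, fetch, max_pages=100):
    """Breadth-first crawl: visit a page, extract its links, queue unseen ones.

    `fetch` is any callable returning the HTML for a URL (or None if the page
    cannot be retrieved); here it stands in for a real HTTP client.
    """
    seen = {start_url}
    queue = deque([start_url])
    visited = []
    while queue and len(visited) < max_pages:
        url = queue.popleft()
        html = fetch(url)
        if html is None:
            continue  # unreachable page: skipped, much like a crawl error
        visited.append(url)
        parser = LinkExtractor()
        parser.feed(html)
        for href in parser.links:
            absolute = urljoin(url, href)  # resolve relative links like "/blog"
            if absolute not in seen:
                seen.add(absolute)
                queue.append(absolute)
    return visited


# Hypothetical site held in memory instead of being fetched over HTTP.
SITE = {
    "https://example.com/": '<a href="/blog">Blog</a> <a href="/about">About</a>',
    "https://example.com/blog": '<a href="/blog/post-1">Post 1</a>',
    "https://example.com/about": "<p>No links here.</p>",
    "https://example.com/blog/post-1": '<a href="/">Home</a>',
    # This page exists, but nothing links to it, so the crawler never finds it.
    "https://example.com/orphan": "<p>Orphan page</p>",
}

print(crawl("https://example.com/", SITE.get))
```

Note that `/orphan` never shows up in the result: a page that no link points to cannot be discovered by following links.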

Indexing

Storing and cataloging the data found on a web page is called indexing. A bot comes to your website and crawls it. Then the content, links, and other information on each page are cataloged, which means they are indexed by the search engine. As you can imagine, this requires substantial computing resources.
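Search engines do not publish their exact index format, but the classic data structure for this kind of catalog is an inverted index, which maps each word to the pages that contain it. The Python sketch below is a toy version under that assumption; `build_index`, `lookup`, and the sample pages are hypothetical.

```python
import re
from collections import defaultdict


def tokenize(text):
    """Lowercase the text and split it into simple word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def build_index(pages):
    """Map each word to the set of URLs whose content contains it."""
    index = defaultdict(set)
    for url, content in pages.items():
        for word in tokenize(content):
            index[word].add(url)
    return index


def lookup(index, query):
    """Return the URLs that contain every word in the query."""
    words = tokenize(query)
    if not words:
        return set()
    results = set(index.get(words[0], set()))
    for word in words[1:]:
        results &= index.get(word, set())  # keep only pages with all words
    return results


# Hypothetical crawled pages and their text content.
pages = {
    "https://example.com/blog/seo-basics": "SEO basics: how search engines crawl pages",
    "https://example.com/blog/page-speed": "Improve page speed for better rankings",
    "https://example.com/about": "About our team",
}

index = build_index(pages)
print(sorted(lookup(index, "search engines")))
```

Answering a query then means looking words up in this prebuilt catalog instead of rereading every page, which is why the indexing step is done ahead of time.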

Rendering

All content published on a web page is stored as HTML, CSS, and JavaScript files. The browser’s task is to read and interpret these files, which are written in their respective code languages, and turn them into the web page the user sees; this conversion of code into a visible page is called rendering. Large HTML or JavaScript files take longer to load and render. For this reason, it is now generally recommended to place important links directly in the HTML files.
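To see why links written directly in the HTML are easier to discover, consider a hypothetical page where one link is plain HTML and another is only created when JavaScript runs. The Python sketch below reads the raw HTML without executing any scripts, roughly what a crawler sees before a rendering step; `AnchorCollector` and the sample page are illustrative assumptions.

```python
from html.parser import HTMLParser


class AnchorCollector(HTMLParser):
    """Collects href values from <a> tags present in the raw HTML source."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.hrefs.append(href)


# Hypothetical page: "/pricing" is plain HTML, while "/features" only
# exists after a browser executes the script.
RAW_HTML = """
<html><body>
  <a href="/pricing">Pricing</a>
  <div id="nav"></div>
  <script>
    const link = document.createElement("a");
    link.href = "/features";
    link.textContent = "Features";
    document.getElementById("nav").appendChild(link);
  </script>
</body></html>
"""

collector = AnchorCollector()
collector.feed(RAW_HTML)
print(collector.hrefs)  # the script is not executed, so only "/pricing" is found
```

The `/features` link only becomes visible after rendering, which costs extra time and computing resources on the search engine’s side.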

Ranking

A search engine aims to show users the results most relevant to the keyword they are searching for, thereby improving the user experience. To do this, it ranks web pages by the content they offer, their page speed, and other factors that affect the user experience. This ranking determines how visible a web page is for related keywords compared with its competitors, and it directly affects visitor traffic. Results that are the most relevant and perform the best are shown higher in the search results.
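Real ranking systems weigh many signals, and their formulas are not public. Purely to illustrate the idea that both relevance and speed feed into the final order, here is a toy Python ranker; the weights, the 2.5-second target, and the `Page` fields are made-up assumptions, not Google’s algorithm.

```python
from dataclasses import dataclass


@dataclass
class Page:
    url: str
    relevance: float     # 0..1, hypothetical measure of how well content matches the keyword
    load_seconds: float  # measured page load time


def speed_score(load_seconds, target=2.5):
    """Map load time to a 0..1 score: 1.0 at or under the target, lower when slower."""
    if load_seconds <= 0:
        return 1.0
    return min(1.0, target / load_seconds)


def rank(pages, relevance_weight=0.8, speed_weight=0.2):
    """Sort pages by a weighted sum of relevance and speed, best first."""
    def score(page):
        return relevance_weight * page.relevance + speed_weight * speed_score(page.load_seconds)
    return sorted(pages, key=score, reverse=True)


results = rank([
    Page("https://a.example/guide", relevance=0.9, load_seconds=5.0),
    Page("https://b.example/guide", relevance=0.85, load_seconds=1.5),
    Page("https://c.example/news", relevance=0.4, load_seconds=1.0),
])
print([p.url for p in results])
```

Here the slightly less relevant but much faster `b.example` page (score 0.88) overtakes the slow `a.example` page (score 0.82), while the fast but barely relevant page still ranks last.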

Are Search Engines Aware of Your Website?

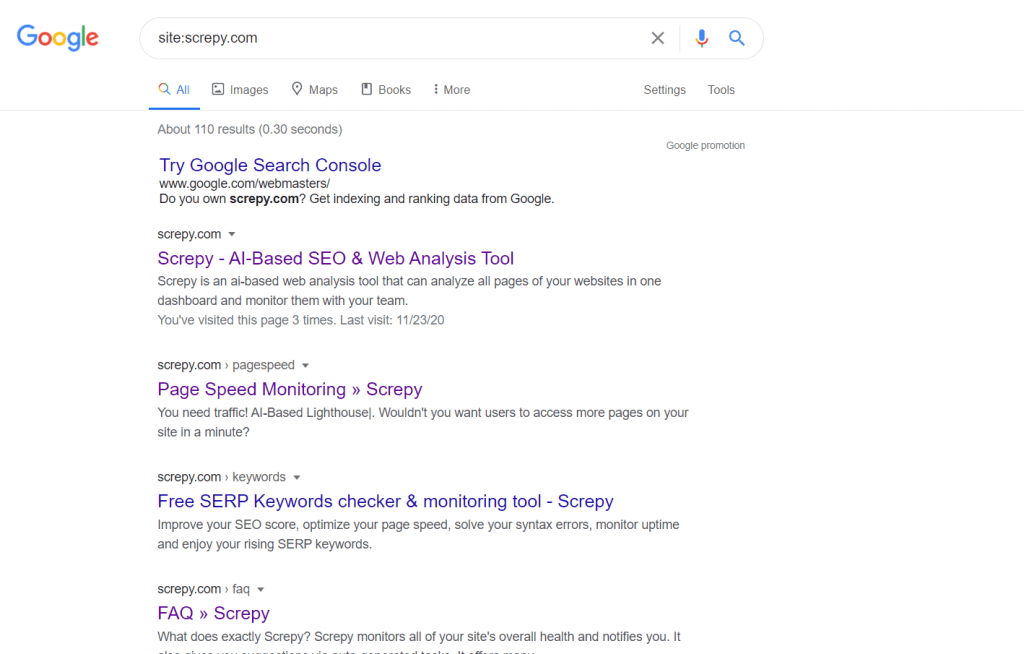

If you want to appear on the search engine results page (SERP) for related searches, you must first make sure that search engines can find your site. Besides the pages that need to be crawled and indexed, a website may also have pages that you do not want indexed. How will you know which pages search engines see and which they don’t?

There is no indexing without crawling, we got that now, right? So, pages that are not indexed by search engines are usually pages that have not been crawled yet or that produced errors during crawling.

The easiest free way to test this is to type “site:domainname.com” into the search engine and press Enter. The results show the pages from your site that the search engine has indexed. If a web page does not appear here, Google (or whichever search engine you use) probably isn’t seeing it. In this case, it may make sense to examine the page’s source code and check whether anything on it blocks crawling or rendering.

As you can see in the picture above, you can also use Google Search Console to analyze which of your pages are indexed and which aren’t.
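Once you have a list of the pages you have published and a list of the pages that actually show up in a `site:` search or a Search Console report, finding the gaps is a simple set comparison. The Python sketch below assumes both lists have already been copied into the script by hand; `normalize` and `missing_from_index` are hypothetical helpers, and treating `http`/`https` and trailing slashes as the same page is a simplifying choice.

```python
from urllib.parse import urlsplit


def normalize(url):
    """Reduce a URL to lowercase host + path without a trailing slash,
    so 'https://example.com/blog/' and 'http://example.com/blog' compare equal."""
    parts = urlsplit(url)
    path = parts.path.rstrip("/") or "/"
    return parts.netloc.lower() + path


def missing_from_index(expected_urls, indexed_urls):
    """Return the expected URLs that do not appear among the indexed ones."""
    indexed = {normalize(u) for u in indexed_urls}
    return sorted(u for u in expected_urls if normalize(u) not in indexed)


# Hypothetical inputs: pages you published vs. pages seen in a site: search.
expected = [
    "https://example.com/",
    "https://example.com/blog",
    "https://example.com/pricing",
]
indexed = [
    "https://example.com",
    "http://example.com/blog/",
]
print(missing_from_index(expected, indexed))
```

Any URL this prints is a candidate for a closer look at its source code, links, and crawler directives.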

Possible Reasons Your Website Is Not Visible

So, if a page on your website is not visible, what are the reasons? Detailed scans with Screpy can help you with code optimization, also called technical SEO: Screpy examines the source code of each of your web pages and alerts you to problems. You can see how to fix each warning through tasks, and Screpy AI predicts how much your score will increase if you fix them.

Anyway, if your web pages are not indexed, you may be experiencing one of the following problems:

- Could this page on your website be too new? If so, it might not have been crawled yet, but that’s not a problem. Googlebot will likely get there soon.

- If no external pages link to your site, the page could be something like a ghost! Even linking to your own website from your social media accounts can be a good way to improve its “visibility”.

- Some code might be preventing search engines from crawling your website. Crawler directives are a common cause.

- If Google has flagged your website as spam, you have a big problem.
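Crawler directives can also live inside a page itself. As one example, a robots meta tag containing `noindex` asks search engines not to index the page, and `nofollow` asks them not to follow its links. The Python sketch below checks a page’s HTML for such a tag; `RobotsMetaReader`, `robots_directives`, and the sample page are hypothetical, and the sketch only inspects `<meta name="robots">` tags.

```python
from html.parser import HTMLParser


class RobotsMetaReader(HTMLParser):
    """Collects directives from <meta name="robots" content="..."> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = dict(attrs)
        if (attributes.get("name") or "").lower() == "robots":
            content = attributes.get("content") or ""
            # Directives are comma-separated, e.g. "noindex, nofollow".
            for directive in content.split(","):
                if directive.strip():
                    self.directives.add(directive.strip().lower())


def robots_directives(html):
    """Return the set of robots meta directives found in the HTML."""
    reader = RobotsMetaReader()
    reader.feed(html)
    return reader.directives


page = '<html><head><meta name="robots" content="noindex, nofollow"></head></html>'
print(sorted(robots_directives(page)))
```

If a page you want in search results carries `noindex`, that tag alone can explain why it never appears.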

What About Robots.txt Files?

If pages on your site are not being indexed, your robots.txt file may be the cause. Errors in this file can disrupt the crawling process and cause various problems. Googlebot generally treats your website’s robots.txt file as follows:

- If Googlebot cannot find a robots.txt file on your site, it continues to crawl your site.

- If the file exists, Googlebot crawls the site except where the file says otherwise.

- If the file exists but Googlebot runs into errors when trying to access it, Googlebot may stop crawling your site. This can leave many pages unindexed.
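You can check how a set of robots.txt rules applies to specific URLs before publishing them. The sketch below uses Python’s standard `urllib.robotparser` module on a hypothetical robots.txt that blocks only `/admin/`; the URLs are examples. Python’s parser implements the basic robots.txt rules and does not reproduce every detail of Google’s own matching, so treat its answers as an approximation.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: everything is crawlable except /admin/.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())  # parse rules from text instead of fetching them

for url in ["https://example.com/blog/post-1", "https://example.com/admin/settings"]:
    allowed = parser.can_fetch("Googlebot", url)
    print(url, "->", "crawlable" if allowed else "blocked")
```

Parsing an empty file (`parser.parse([])`) lets every URL through, which mirrors the first case above: with no rules, the whole site stays crawlable.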

Why Do Crawling, Rendering, and Indexing Matter?

In this content, we covered four concepts: crawling, rendering, indexing, and ranking. In fact, the first three largely determine the fourth. If you get them right and have enough quality content, you can help Google crawl every page of your website and give it a chance to rank at the top. This is the core rule of search engine optimization (SEO): your website becomes more visible, and users see you before your competitors without you having to spend a large budget on SEM. As a result, your traffic and brand recognition increase.

If Google encounters unnecessary code, low-quality pages, or many errors with different status codes while crawling your website, it may conclude that you offer a poor user experience and that your website is not high quality. As a result, Google may not rank you where you want even if it indexes your pages. So search rankings depend on all of these factors, and many more!

Test Your Website for Issues

You can quickly analyze your site